HOW TO: Configure WordPress to support multiple Environments

IMPORTANT: Only compatible with WordPress 5.5 and above.

Why should I care about WordPress environments?

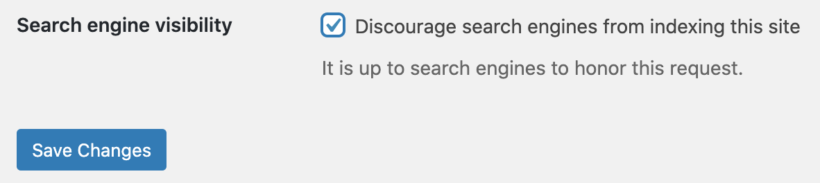

Suppose you have a development version of your WordPress site (like dev.403page.com) and you have the "Discourage search engines from indexing this site" checkbox ticked (in wp-admin > Settings > Reading).

If that option isn't also set on the production site (i.e. 403page.com), which it shouldn't be, the next time you deploy from production to development the option will be switched off and your dev URLs may start popping up in Google!

Even worse, if you deploy the other way around and don't remember to uncheck that box - your site may start to fade from search engine rankings long before you even realise.

What if your WordPress site had a way to know which instance it was loading on, and execute different code accordingly?

That way you can keep the code identical on all environments for easy deploys in either direction - enabling/disabling/executing specific features automatically based on where they are.

Enter wp_get_environment_type!

... well actually, there were already a few ways to do this. In fact, the example I'm about to share could be trimmed down to work on older versions of WordPress as it is. But official native support for environment types (wp_get_environment_type) is very exciting, particularly from a coding hygiene perspective.

Some reasons you might want to utilise environments are:

- Disable automated emails on development/staging without installing new plugins and checking boxes...

- Automatically turn WooCommerce into catalogue-only mode

- Automatically alter payment gateway configurations to use test keys

- Simply display a banner to remind certain admin users "YOU ARE ON PRODUCTION - BE CAREFUL WITH CODE CHANGES"

... and a whole bunch of other stuff that you'll only start thinking of once you know how easy it is to set up.

Read more about the wp_get_environment_type() function here.
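As a rough illustration of the banner idea from the list above, here is a minimal sketch of an mu-plugin that prints an admin notice naming the current environment. The envdemo_banner_text() function and the non-production wording are assumptions made up for this example; admin_notices, esc_html() and wp_get_environment_type() are standard WordPress.

```php
<?php
// Hypothetical mu-plugin sketch: show an admin banner naming the current environment.
// The envdemo_* name is invented for this example.

/**
 * Build the banner text for a given environment type.
 */
function envdemo_banner_text( string $environment ): string {
    if ( 'production' === $environment ) {
        return 'YOU ARE ON PRODUCTION - BE CAREFUL WITH CODE CHANGES';
    }
    return 'You are on the ' . $environment . ' environment.';
}

// Only hook into WordPress when it is actually loaded.
if ( function_exists( 'add_action' ) ) {
    add_action( 'admin_notices', function () {
        $text = envdemo_banner_text( wp_get_environment_type() );
        echo '<div class="notice notice-warning"><p>' . esc_html( $text ) . '</p></div>';
    } );
}
```

Keeping the text in its own small function means the wording can be checked without loading WordPress at all.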

Let's automate the search engine visibility option

Going back to the original example (though let's add a third environment for fun), there are only really 2 steps.

The first is to make WordPress aware of where it is. We'll use the domain WordPress is loading on to determine which "environment" it is by adding this near the bottom of your wp-config.php (just above the "# That's It. Pencils down" line):

// Sites:
$productionurl  = '403page.com';
$stagingurl     = 'stage.403page.com';
$developmenturl = 'dev.403page.com';

// Exclude WP-CLI commands from the host check
if ( ! ( defined( 'WP_CLI' ) && WP_CLI ) ) {

    // Set the environment type for production
    if ( $_SERVER['SERVER_NAME'] === $productionurl ) {
        define( 'WP_ENVIRONMENT_TYPE', 'production' );
    }

    // Set the environment type for staging
    if ( $_SERVER['SERVER_NAME'] === $stagingurl ) {
        define( 'WP_ENVIRONMENT_TYPE', 'staging' );
    }

    // Set the environment type for development
    if ( $_SERVER['SERVER_NAME'] === $developmenturl ) {
        define( 'WP_ENVIRONMENT_TYPE', 'development' );
    }
}

Don't forget to change the domains on the first 3 lines to your own.

We're using $_SERVER['SERVER_NAME'], which holds the host name of the server handling the current request, and comparing it against the domains you defined at the top of the code.

If it matches one of the 3 variables, we define WP_ENVIRONMENT_TYPE (which is now a native WordPress constant). If nothing matches, the constant stays undefined and wp_get_environment_type() falls back to 'production'.
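The same host matching can also be written as a lookup table, which may be easier to extend if you add more environments later. This is only a sketch: the envdemo_environment_for_host() helper and the $envdemo_* variables are hypothetical names, not part of WordPress, and the domains are the ones from the example above.

```php
<?php
// Hypothetical helper for wp-config.php; the function name and map are assumptions.

/**
 * Return the environment type for a host name, or null if the host is unknown.
 */
function envdemo_environment_for_host( string $host, array $map ) {
    $host = strtolower( $host );
    return isset( $map[ $host ] ) ? $map[ $host ] : null;
}

$envdemo_map = array(
    '403page.com'       => 'production',
    'stage.403page.com' => 'staging',
    'dev.403page.com'   => 'development',
);

// Fall back to an empty string when SERVER_NAME is not available.
$envdemo_host = isset( $_SERVER['SERVER_NAME'] ) ? $_SERVER['SERVER_NAME'] : '';
$envdemo_type = envdemo_environment_for_host( $envdemo_host, $envdemo_map );

// Only define the constant for a known host, and never define it twice.
if ( null !== $envdemo_type && ! defined( 'WP_ENVIRONMENT_TYPE' ) ) {
    define( 'WP_ENVIRONMENT_TYPE', $envdemo_type );
}
```

Because an unknown host leaves the constant undefined, requests on any other domain are treated as production by wp_get_environment_type().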

Cool! Now WordPress knows how to tell the difference between its different environments. But how do we use it?

The second and final step is to add an mu-plugin (it can be a regular plugin, or some code added to your theme's functions.php, but in this case an mu-plugin makes the most sense).

Create this file /wp-content/mu-plugins/auto-crawl-disable.php (and create the /wp-content/mu-plugins directory too if it doesn't already exist). Add this code:

<?php
/**
 * Plugin Name: Auto Crawl Disable
 * Plugin URI: https://403.ie
 * Description: Discourage search engines from indexing this site if it's not the production version.
 * Author: 403Page Labs
 * Version: 0.0.1
 */

switch ( wp_get_environment_type() ) {

    // If production site, allow bots to crawl
    case 'production':
        $discouragecrawlers = get_option( 'blog_public' );
        if ( $discouragecrawlers !== '1' ) {
            update_option( 'blog_public', '1' );
        }
        break;

    // Discourage search engines from indexing if staging site
    case 'staging':
        $discouragecrawlers = get_option( 'blog_public' );
        if ( $discouragecrawlers !== '0' ) {
            update_option( 'blog_public', '0' );
        }
        break;

    // Discourage search engines from indexing if development site
    case 'development':
        $discouragecrawlers = get_option( 'blog_public' );
        if ( $discouragecrawlers !== '0' ) {
            update_option( 'blog_public', '0' );
        }
        break;
}

You can have that identical code across all 3 environments, but it will behave independently, as it should, on each.

On production, the option will be unchecked, telling crawlers like Googlebot that they may index your pages, whereas on staging and development the option will be checked, telling bots to ignore them.
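If you would rather not write to the database on each environment, one possible variation is to override the value as it is read, using WordPress's pre_option_blog_public filter. This is a sketch of a different approach from the plugin above, not a replacement for it; envdemo_blog_public_for() is a hypothetical helper, and it assumes that anything other than production should be hidden from crawlers.

```php
<?php
// Hypothetical alternative mu-plugin; the envdemo_* name is an assumption.

/**
 * Map an environment type to a blog_public value:
 * '1' = allow indexing, '0' = discourage search engines.
 */
function envdemo_blog_public_for( string $environment ): string {
    return ( 'production' === $environment ) ? '1' : '0';
}

// Only hook into WordPress when it is actually loaded.
if ( function_exists( 'add_filter' ) ) {
    // pre_option_blog_public short-circuits get_option( 'blog_public' )
    // whenever the callback returns something other than false.
    add_filter( 'pre_option_blog_public', function () {
        return envdemo_blog_public_for( wp_get_environment_type() );
    } );
}
```

With this variation the stored option is left untouched, so deploying the database in either direction does not change what crawlers are told on each environment.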

A brief note on Search engine visibility in WordPress

When the box is checked, WordPress adds this meta to all public pages:

<meta name='robots' content='noindex,follow' />

It also serves a virtual robots.txt that contains:

User-agent: *
Disallow: /