HOW TO: Cache the WordPress REST API Post endpoint in Cloudflare

This site, 403page.com, is decoupled: the WordPress content is hosted on an Apache server and passed through to a Node.js server, which uses Frontity to render the page.

I use one server instance to host WordPress, where I write the content for this site, and a second server instance running Node.js to render the React frontend (which is what you're currently looking at).

The page you're seeing is served to your browser as static JS/CSS, with this text dynamically piped through from the Apache server (which hosts WordPress) using the REST API.
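To make that flow concrete, here is a rough sketch of the kind of request a frontend makes to pull a post from WordPress. Frontity handles this internally, so this is not its actual code. The `/wp-json/wp/v2/posts/<id>` route is the standard WordPress REST route for a single post, while `WpPost`, `Fetcher`, `postUrl` and `loadPost` are names made up for this illustration.

```typescript
// Minimal shape of the fields this sketch reads from a WordPress post.
interface WpPost {
  id: number;
  title: { rendered: string };
  content: { rendered: string };
}

// Anything with the same call shape as the global fetch (Node 18+ or a browser).
type Fetcher = (url: string) => Promise<{
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}>;

// Build the URL for a single post on the standard /wp/v2/posts route.
function postUrl(apiBase: string, postId: number): string {
  const base = apiBase.replace(/\/+$/, ""); // drop any trailing slashes
  return `${base}/wp-json/wp/v2/posts/${postId}`;
}

// Fetch one post and fail loudly if the origin (or the cache) returns an error.
async function loadPost(
  fetcher: Fetcher,
  apiBase: string,
  postId: number
): Promise<WpPost> {
  const response = await fetcher(postUrl(apiBase, postId));
  if (!response.ok) {
    throw new Error(`REST request failed with status ${response.status}`);
  }
  return (await response.json()) as WpPost;
}
```

In practice you would call something like `loadPost(fetch, "https://restfeed.403page.com", 123)`, where `123` is a hypothetical post ID. Every visitor who triggers a request like this hits the Apache server unless something sits in between.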

The static resources are served by the Node.js server through Cloudflare for better performance (and security). This allows effectively millions of visitors to load the site concurrently on very modest server hardware.

The Apache server that serves the WordPress content, however, is very much not able to handle this kind of traffic. You would need a seriously expensive Apache rig, ideally a load-balanced cluster, to do this. Even then, it will struggle under high load without a caching layer in front of it.

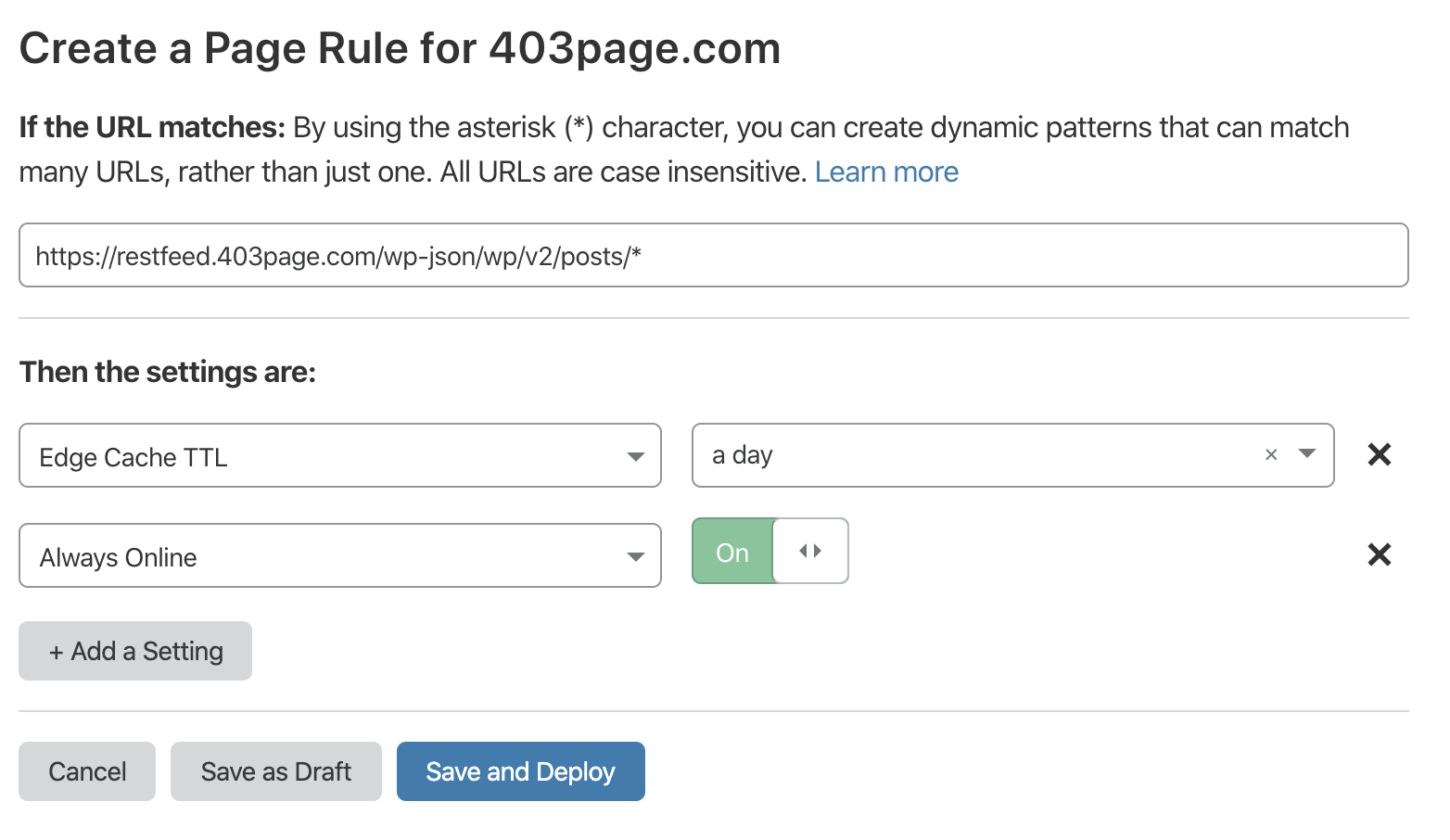

So why don't I cache the daylights out of the REST endpoint too? I'm glad you asked... that's exactly what I do! Under the Page Rules section of my Cloudflare account, here's the rule I added:

Add the REST API post endpoint URL with an asterisk at the end to catch every permutation. In my case, I have the subdomain restfeed.403page.com mapped to the Apache/WordPress server, so my endpoint is https://restfeed.403page.com/wp-json/wp/v2/posts/*.
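If you want to sanity-check which request URLs a trailing-asterisk pattern would catch, here is a small sketch. It assumes the asterisk simply means "any run of characters" and that the pattern must match the whole URL, which is my understanding of how Cloudflare describes Page Rule matching; it is not Cloudflare's own matcher. The `api.example.com` host and the function names are hypothetical.

```typescript
// Convert a pattern where "*" means "any run of characters" into an anchored RegExp.
function patternToRegExp(pattern: string): RegExp {
  const escaped = pattern
    .split("*")
    // Escape regex metacharacters in the literal parts of the pattern.
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp(`^${escaped}$`);
}

// True when the whole URL matches the wildcard pattern.
function matchesPattern(pattern: string, url: string): boolean {
  return patternToRegExp(pattern).test(url);
}

// Hypothetical host, used only for illustration.
const examplePattern = "https://api.example.com/wp-json/wp/v2/posts/*";

const candidateUrls = [
  "https://api.example.com/wp-json/wp/v2/posts/123",
  "https://api.example.com/wp-json/wp/v2/posts/?slug=hello-world",
  "https://api.example.com/wp-json/wp/v2/posts?slug=hello-world",
  "https://api.example.com/wp-json/wp/v2/pages/7",
];

for (const url of candidateUrls) {
  console.log(matchesPattern(examplePattern, url), url);
}
```

Under this simplified model the first two URLs match and the last two do not. Note the third one: a request to `posts?slug=...` has no slash after `posts`, so a pattern ending in `posts/*` would miss it. It's worth comparing the pattern against the URLs your frontend actually requests.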

I've set the Edge Cache TTL setting to a day, so Cloudflare refreshes its copy of each response from the origin at most once every 24 hours, rather than every time a visitor loads my site. I have also added the Always Online setting so that even if the Apache/WordPress server can't be reached, it should still serve the last successful response.
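I set this rule up in the dashboard, but the same settings can also be written down as the JSON body that Cloudflare's Page Rules API accepts (`POST /zones/{zone_id}/pagerules`). Treat the following as a sketch rather than a copy of my actual rule. In particular, the `cache_level: cache_everything` action is an assumption on my part: Cloudflare does not cache JSON responses by default as far as I know, so a rule like this usually needs it. The function name, type names and example pattern are made up for illustration.

```typescript
interface PageRuleAction {
  id: string;
  value: string | number;
}

interface PageRulePayload {
  targets: {
    target: "url";
    constraint: { operator: "matches"; value: string };
  }[];
  actions: PageRuleAction[];
  status: "active" | "disabled";
}

// Edge Cache TTL is expressed in seconds; one day = 86400.
const ONE_DAY_SECONDS = 24 * 60 * 60;

// Build the request body for a rule that caches a REST endpoint for a day.
function buildCachingPageRule(urlPattern: string): PageRulePayload {
  return {
    targets: [
      { target: "url", constraint: { operator: "matches", value: urlPattern } },
    ],
    actions: [
      // Assumption: needed so non-static responses such as JSON get cached.
      { id: "cache_level", value: "cache_everything" },
      // Keep each response at the edge for 24 hours.
      { id: "edge_cache_ttl", value: ONE_DAY_SECONDS },
      // Serve a stored copy when the origin is unreachable.
      { id: "always_online", value: "on" },
    ],
    status: "active",
  };
}
```

Calling `JSON.stringify(buildCachingPageRule("restfeed.403page.com/wp-json/wp/v2/posts/*"))` gives a body you could send with an API token. Once a rule like this is live, the `cf-cache-status` response header is a quick way to see whether a request was served from the edge (`HIT`) or went to the origin (`MISS`).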

With this method, you can probably see how you're well on your way to 100% uptime, even while scaling to millions of users. For more on setting up a headless site with Frontity, check out our article here.