Handle high traffic by sanitising social media traffic in nginx

Prerequisites

a. Your public-facing server is nginx

b. You have a caching layer like nginx's FastCGI cache or Varnish

What are we solving here?

If you've ever run an advertising campaign directing large batches of visitors to your site, you will have noticed a large spike in CPU load - even on static pages.

Obviously.

What's not so obvious is that, often, a good chunk of the load may be unnecessary.

While using a caching layer is a must for any production website, a good deal of traffic from social sources may bypass it completely. Each of those visitors nibbles at your CPU by getting a freshly generated page instead of the cached version.

The reason is that most standard caching setups (like nginx's FastCGI cache or Varnish) include the query string in the cache key, so each unique query string is treated as its own page. So while domain.com/buy-now/ may be cached, domain.com/buy-now/?uniqueid=123456789 isn't.
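As an illustration, here is a FastCGI cache key as it commonly appears in nginx configs. This is an assumed example, so check what your own config actually uses. `$request_uri` holds the full original URI including its query string, so every unique query string gets its own cache entry. Varnish's built-in `vcl_hash` behaves the same way, because it hashes `req.url`, which also includes the query string.

```nginx
# Assumed example of a common FastCGI cache key (valid in http, server or location).
# $request_uri is the original URI *with* its query string, so for example:
#   GET https://domain.com/buy-now/                    -> key "httpsGETdomain.com/buy-now/"
#   GET https://domain.com/buy-now/?uniqueid=123456789 -> key "httpsGETdomain.com/buy-now/?uniqueid=123456789"
# Two different keys mean two separate cache entries, and the second one
# has to be generated by PHP before it can be cached.
fastcgi_cache_key "$scheme$request_method$host$request_uri";
```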

Social traffic often carries a unique string on the end for each referring campaign, each referring user or even each incoming visitor. Facebook's fbclid parameter is a common example. That effectively makes your caching layer irrelevant and leaves your poor CPU doing a bunch of extra work.

With a big enough spike, it could topple your server completely.

You could modify your caching configuration to ignore query strings completely, or simply instruct nginx to strip the query string from the end of every incoming URI.

THIS IS A BAD IDEA... but it looks something like this:

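```nginx
# Strip all query strings (inside your server { } block)
if ($query_string != "") {
    rewrite ^(.*)$ $uri? permanent;
}
```

This sends a 301 redirect from domain.com/buy-now/?uniqueid=123456789 to domain.com/buy-now/. The trailing ? tells nginx to drop the original query string. The follow-up request is then served the cached /buy-now/ page. It will do this for any request that has a query string at all.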

But why is this a bad idea?

It will rewrite ALL query strings. That potentially includes requests for assets like CSS and JS that need a query string to pull the correct version, as well as anything else that genuinely depends on one, such as site search.

It's worth noting that most default caching configurations will indeed cache a request with a query string after the first hit. The problem only occurs when a large number of unique query strings arrive at the same time.
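One way to check whether your own setup caches query-string requests is to expose `$upstream_cache_status` as a response header. This is a sketch that assumes nginx's FastCGI cache, and the header name `X-Cache-Status` is an arbitrary choice. Request the same query-string URL twice, and the second response should say HIT. If it says BYPASS every time, your config is probably skipping the cache for query strings on purpose. Some WordPress FastCGI cache recipes do this. In that case, the socialcache approach later in this post won't help until that changes.

```nginx
# Sketch: add this next to your fastcgi_cache directive (typically the PHP location).
# add_header is only inherited by blocks that define no add_header of their own,
# so it is safest in the same block as fastcgi_cache.
# $upstream_cache_status reports MISS, BYPASS, EXPIRED, STALE, UPDATING, REVALIDATED or HIT.
add_header X-Cache-Status $upstream_cache_status;
```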

Well, what's the solution then?

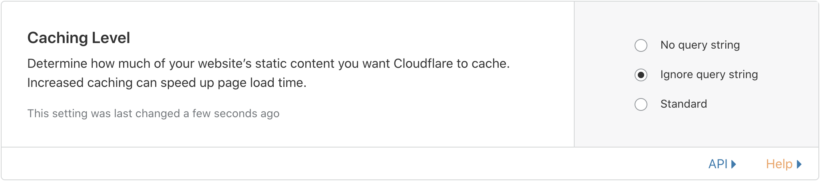

There's actually a bunch of solutions for this. The super easy cheat way is to just slap Cloudflare in front of your site and enable the Ignore query string caching option:

But that's boring!

And aside from being boring, it may not be an option if your (or your client's) site has specific DNS requirements that don't allow you to change nameservers just for the sake of caching.

Plus, if you're reading this, you probably want to know how to do it yourself because you're a nerd like me. So here's what I add to nginx ahead of high-traffic events (while leaving my caching configuration untouched).

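```nginx
# Put this inside your server { } block.

# If the user agent is Instagram or Facebook, set a value (put whatever user agent
# strings you want to match in the "" separated by a | ). Facebook's in-app browser
# usually identifies itself with FBAN/FBAV tokens rather than the word "Facebook".
set $socialtraffic 0;
if ($http_user_agent ~* "Instagram|Facebook|FBAN|FBAV") {
    set $socialtraffic 1;
}

# If there's a query string, set a value (except 'socialcache');
# an empty query string leaves it at 0
set $querystringappended 0;
if ($query_string != "") {
    set $querystringappended 1;
}
if ($query_string = "socialcache") {
    set $querystringappended 0;
}

# Leave static assets alone: in-app browsers send the same user agent
# for every CSS/JS/image request the page makes
set $staticasset 0;
if ($uri ~* "\.(css|js|png|jpe?g|gif|svg|webp|ico|woff2?)$") {
    set $staticasset 1;
}

# Combine the values into one string
set $stripsocialquery "$socialtraffic:$querystringappended:$staticasset";

# Social traffic with a query string that isn't a static asset: redirect to ?socialcache
# (the trailing ? stops nginx from appending the original query string again)
if ($stripsocialquery = "1:1:0") {
    rewrite ^(.*)$ $uri?socialcache? permanent;
}
```

What this does is target specific user agents, like traffic coming from Instagram, and redirect them so that they always ask for ?socialcache.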

A request for domain.com/buy-now/?uniqueid=123456789 will become domain.com/buy-now/?socialcache.

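This way, requests from everyone else are left alone. Nothing that relies on a query string for them (like versioned JS/CSS) breaks, while the traffic most likely to give you a problem is cleaned up and served from cache. The trade-off is that social visitors lose their original query string, so don't point campaign links at pages that need one to work.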
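If you'd rather avoid a chain of `if`/`set` directives, the same logic can be sketched with nginx's `map` directive. This is an alternative of my own rather than a drop-in copy of the config above, so use it instead of the if-based version, not alongside it. The variable names `$social_ua`, `$social_asset` and `$social_redirect` are made up for this example. The maps live in the `http { }` context, and only a single `if` remains in the server block.

```nginx
# Alternative sketch: the same logic with map instead of chained if/set.
# The three maps go in the http { } context (e.g. a file in conf.d/).

# 1 when the user agent looks like Instagram or Facebook (in-app browser or crawler)
map $http_user_agent $social_ua {
    default                            0;
    "~*(instagram|facebook|fban|fbav)" 1;
}

# 1 for static assets that should never be redirected
map $uri $social_asset {
    default                                           0;
    "~*\.(css|js|png|jpe?g|gif|svg|webp|ico|woff2?)$" 1;
}

# Combine "<ua>:<asset>:<query string>". Exact strings are checked before
# regexes, so a request that already has ?socialcache maps to 0.
map "$social_ua:$social_asset:$args" $social_redirect {
    default           0;
    "1:0:socialcache" 0;
    "~^1:0:."         1;   # social, not an asset, non-empty query string
}

# In your server { } block:
if ($social_redirect) {
    return 301 $uri?socialcache;
}
```

`return` never appends the original query string, so it needs no trailing `?`.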

But why add socialcache to the end?

As mentioned above, a request with a query string will be cached after the first hit. That means domain.com/buy-now/?socialcache puts the same load on your server's processor as domain.com/buy-now/ would.

But by having ?socialcache appended to the end, you can do some handy log parsing to track how many requests came through from Instagram/Facebook (or whatever user agents you targeted).
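One way to make that parsing easier is to write these requests to a log file of their own. This is a sketch that assumes nginx 1.7.0 or later, which added the `if=` parameter to `access_log`. The log paths and the `$socialcache_request` variable name are made up for the example.

```nginx
# In the http { } context: 1 when the query string is exactly "socialcache"
map $args $socialcache_request {
    default     0;
    socialcache 1;
}

# In your server { } block. An access_log at this level replaces any inherited
# from http { }, so repeat your normal log here too (path below is assumed).
access_log /var/log/nginx/access.log combined;
access_log /var/log/nginx/socialcache.log combined if=$socialcache_request;
```

Each line in socialcache.log is then a request that arrived on a ?socialcache URL. A simple line count gives a rough tally of social visits for the period the log covers. Refreshes and crawler hits are included, so treat the figure as approximate.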

Neat!